In the first part of Applied AI I described how to use an RNN implemented in Torch to generate text by fine-tuning it on viking metal lyrics. That model is quite outdated, and there are many better ones around. In this post, I'm going to show you how I trained the superior model GPT2 on (now ex-)president Trump's tweets and made that into a Twitter bot.

The GPT2 Text Generation Model

One of the biggest leaps in machine learning text generation was the release of GPT2 by OpenAI. GPT2 (Generative Pre-trained Transformer 2) is the first text generation AI whose results are sometimes completely human-like. So much so that OpenAI at first didn't release the biggest variants of GPT2 to the public, for fear of malicious use, although I think that was in part a marketing scheme. The transformer architecture is quite different from earlier approaches and did not really evolve from the convolutional or recurrent neural networks used in previous text generation models.

Another difference from RNN-based models is that GPT2 is huge. It comes in four sizes with increasing numbers of parameters: small (124M parameters), medium (355M parameters), large (774M parameters) and extra large (1.5B parameters). The bigger models take more time and resources (especially RAM) to fine-tune. Also, the bigger the model, the more text is required for the fine-tuning not to overfit, so bigger is not necessarily better. Overfitting is when training makes the model too closely tailored to your training data, which makes it worse at general use. For a language generation model, overfitting usually means that instead of generating text in the style of the fine-tuning material, it starts copying passages from that material verbatim. With a corpus of somewhere around 1M, for instance, you're best off with the smallest model to avoid overfitting.
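One rough way to spot that kind of copying is to count how many generated lines also appear word for word in the corpus. The sketch below is only an illustration: the function name and the normalization (lowercasing, collapsing whitespace) are my own assumptions, not part of GPT2 or any fine-tuning tool.

```python
def verbatim_copy_rate(generated_lines, corpus_lines):
    """Return the fraction of generated lines found word for word in the corpus."""

    def normalize(line):
        # Lowercase and collapse whitespace so trivial differences don't hide a copy.
        return " ".join(line.lower().split())

    corpus = {normalize(line) for line in corpus_lines if line.strip()}
    candidates = [normalize(line) for line in generated_lines if line.strip()]
    if not candidates:
        return 0.0
    copies = sum(1 for line in candidates if line in corpus)
    return copies / len(candidates)
```

A rate close to zero is what you hope for. If a large share of the output is copied straight from the corpus, the model has probably been trained for too long or is too big for the amount of text.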

Fine-tuning GPT2

Fine-tuning GPT2 requires a corpus: a body of text that the generic model will be trained to mimic. For the Trump bot, I found a complete, downloadable archive of tweets at The Trump Archive. I downloaded the archive and made a super simple converter from JSON to plain text, since GPT2 is fine-tuned on plain text. I also made a couple of simple manual exclusions, for instance removing retweets and tweets that were basically just mentions.
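A converter along those lines could look roughly like this. It is a sketch, not the script I actually used: the field names text and isRetweet, the "t" flag value and the min_words threshold are assumptions about the archive format, so check them against the file you download.

```python
import json


def tweets_to_lines(records, min_words=3):
    """Turn tweet records into one plain-text line per tweet, skipping noise."""
    lines = []
    for record in records:
        # Flatten line breaks so every tweet ends up on exactly one line.
        text = " ".join(record.get("text", "").split())
        # Assumed field: "isRetweet" may be a boolean or a "t"/"f" string.
        if record.get("isRetweet") in (True, "t") or text.startswith("RT @"):
            continue
        # Skip tweets that are basically just mentions.
        words = [word for word in text.split() if not word.startswith("@")]
        if len(words) < min_words:
            continue
        lines.append(text)
    return lines


def convert(json_path, txt_path):
    """Read a JSON array of tweets and write the plain-text corpus."""
    with open(json_path, encoding="utf-8") as source:
        records = json.load(source)
    with open(txt_path, "w", encoding="utf-8") as target:
        target.write("\n".join(tweets_to_lines(records)) + "\n")
```

Keeping one tweet per line matters later on, because the fine-tuned model picks up that line structure and the bot expects one tweet per line.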

At about this stage in my project, machine learning expert Max Woolf (@minimaxir) released a Google Colab notebook for GPT2 fine-tuning. Google Colab is a cloud-based machine learning service that lets you use GPUs in Google's data centers for free. So if you have a Google account, you can just make a copy of that Colab notebook and use it to fine-tune GPT2 on your own corpus. He has described the process in detail on his blog.

- Copy @minimaxir's Google Colab notebook into your own Google Drive.

- Upload your corpus to Google Drive as a plain text file.

- Follow the steps in the notebook and train the selected GPT2 model on your text. Since the training is unsupervised, you don't have to label anything or otherwise intervene. But keep track of the loss. You would think that a lower loss is always better, but when the loss goes below 1.0 you're at risk of overfitting.

- Use your fine-tuned checkpoint to generate new text, and save it to a text file. By setting the temperature you can control whether the result is fairly human-like (t around 0.7) or creative and goofy (t at 1.0 or above). If you train GPT2 on tweets, most lines of the generated text will be about the right length for a tweet.
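To give a feel for what the temperature setting does, here is a minimal sketch of temperature sampling in plain Python. It is not the notebook's code, and the function names are made up for the illustration. The idea is that the model's raw scores (logits) for the next token are divided by the temperature before being turned into probabilities.

```python
import math
import random


def apply_temperature(logits, temperature):
    """Softmax over logits scaled by 1/temperature."""
    scaled = [logit / temperature for logit in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def sample_token(logits, temperature, rng=random):
    """Pick a token index at random, weighted by the tempered probabilities."""
    probabilities = apply_temperature(logits, temperature)
    return rng.choices(range(len(probabilities)), weights=probabilities, k=1)[0]
```

A low temperature sharpens the distribution so the most likely token wins more often, which gives safer and more human-like text. A high temperature flattens it, so unlikely tokens get picked more often and the output gets goofier.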

Make a Twitter bot

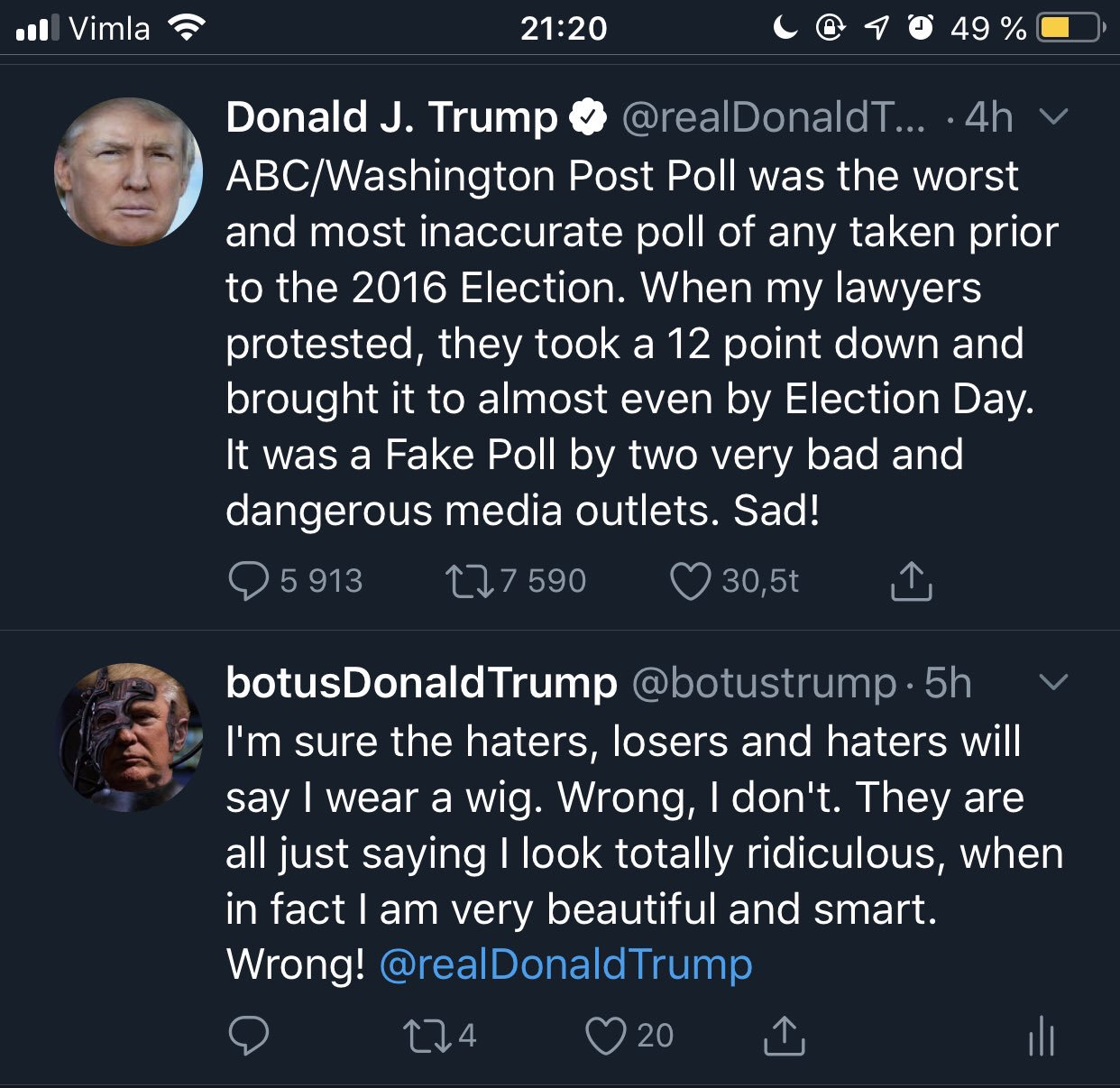

The Trump Twitter bot is an offline bot, in the sense that it uses pre-generated tweets rather than having GPT2 generate a new tweet in real time each time the bot posts. There are multiple reasons for that. One is that GPT2 is slow to generate text, especially on a web server, which usually doesn't have a GPU. Another, more important one, is that no text generation model consistently generates good content without human supervision. This is especially important when you are posting stuff on Twitter. On one occasion @botustrump got temporarily banned for posting content that got flagged even though I had curated all tweets by hand (which is slightly ironic considering that Trump got away with tweeting stuff a lot worse).

The easiest way of getting a Twitter bot up and running that uses your generated text is to just use my repo.

- Build the Docker image from source or get the image here: https://hub.docker.com/repository/docker/osirisguitar/botus-twitter

- Create a volume for /app/data

- Put a file called tweets.txt containing one tweet per line in /app/data

- Put meaningful values in these environment variables:

  - CONSUMER_KEY - from your Twitter app (yes, you need to make one)

  - CONSUMER_SECRET - from your Twitter app

  - ACCESS_TOKEN - from your Twitter app

  - ACCESS_TOKEN_SECRET - from your Twitter app

  - CRON - cron run pattern (e.g. 0 */4 * * * for every fourth hour)

The running container will randomly select one line from the text file tweets.txt, tweet it, remove it from tweets.txt and finally add it to the JSON file called tweeted.json along with the date it was tweeted. When tweets.txt has been emptied, it will stop tweeting. This also means it will never post duplicate tweets (unless there are duplicate lines in tweets.txt).
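That select, tweet, remove and log cycle can be sketched in a few lines of Python. This is not the code from my repo, just an illustration of the logic. The tweet_once function, the post callback (standing in for the actual Twitter API call) and the exact layout of tweeted.json are assumptions made for the example.

```python
import json
import random
from datetime import datetime, timezone
from pathlib import Path


def tweet_once(data_dir, post, rng=random):
    """Post one random line from tweets.txt and move it to tweeted.json.

    `post` is a hypothetical callable that sends the text to Twitter.
    Returns the posted text, or None when tweets.txt is empty.
    """
    data_dir = Path(data_dir)
    tweets_file = data_dir / "tweets.txt"
    tweeted_file = data_dir / "tweeted.json"

    text = tweets_file.read_text(encoding="utf-8")
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return None  # nothing left to tweet

    tweet = lines.pop(rng.randrange(len(lines)))
    post(tweet)  # if posting raises, the files are left untouched

    # Write back the remaining tweets, then log the posted one with its date.
    tweets_file.write_text("".join(line + "\n" for line in lines), encoding="utf-8")
    history = []
    if tweeted_file.exists():
        history = json.loads(tweeted_file.read_text(encoding="utf-8"))
    history.append({"text": tweet, "date": datetime.now(timezone.utc).isoformat()})
    tweeted_file.write_text(json.dumps(history, indent=2), encoding="utf-8")
    return tweet
```

A scheduler would call tweet_once once per cron tick. Posting before touching the files means a failed API call doesn't silently eat a tweet.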

Wrap-up

@botustrump was active from September 2019 until January 20th, 2021, when the real president Trump was replaced in the White House.

Why did you select Trump for this experiment? First of all, I didn't like Trump's presidency very much, even though I don't live in the US. Second, few others have such a distinct writing style on social media, one that is instantly recognizable in a bot.

Isn't hand-picking parts of the generated content cheating? Well, you wouldn't be alone in thinking that. There was a bit of a shit storm when I posted a link to the bot on Reddit. I don't agree though. As I mentioned above, most machine learning experts (like Max Woolf) agree that no text generation model will generate consistently good content unsupervised, and that most of the "I made a bot watch 5000 hours of x" showcases are either fake or heavily curated by humans.

Isn't a bot using content generated at high temperature a bad showcase for AI? Maybe, but I didn't make this bot to showcase GPT2. I did it for the lulz.

The Applied AI Series

The first part of Applied AI was about making a text generating service with Torch RNN.

The third part, coming soon, will be about how to live-generate content using GPT2.

If you liked this post, or @botustrump, please comment with your favorite examples of its tweets!